Why Did mRNA Vaccines, Innovative Alzheimer's Research, and Nobel Prize Winners Get Rejected?

How Our Scientific System Is Costing Lives

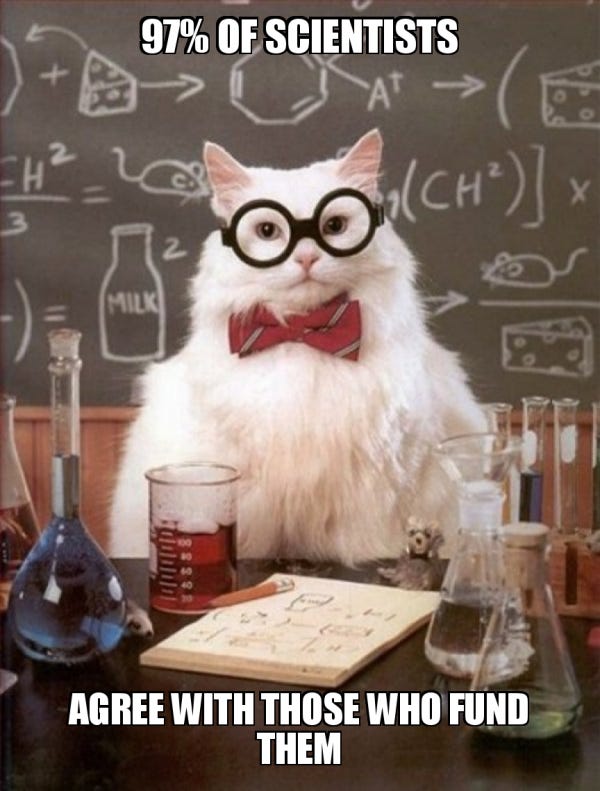

Science funding rewards consensus. Breakthroughs come from disagreement. We have a problem.

A meteorologist first proposed the controversial idea that the continents were moving slowly. Geologists ridiculed him for decades. The meteorologist was right.

Germ theory also came from an outsider to the medical field. A microbiologist fought doctors who insisted "bad air" caused disease. The medical establishment resisted for years, partly because doctors didn't like being told they were spreading disease with their own hands.

Science has always had to contend with a scientific community that protects existing worldviews. This makes progress much harder: every major leap forward requires someone willing to challenge the consensus.

But past scientists didn’t have to deal with today’s formalized peer review, a hierarchy of scientific journals, and funding committees.

This matters. As Adam Mastroianni of Experimental History puts it: "Remember that it used to be obviously true that the Earth is the center of the universe, and if scientific journals had existed in Copernicus' time, geocentrist reviewers would have rejected his paper."

You might think these kinds of big mistakes can’t happen today. But they do.

The mRNA technology behind the COVID-19 vaccines was rejected by both Nature and Science (the top two scientific journals) in 2005 because the work was “not novel” and “not of interest to the broad readership.” The lead scientist, Katalin Karikó, struggled to get funding for mRNA research because of skepticism from the established community. Her university even told her to stop working on mRNA or be demoted. She chose demotion.

mRNA vaccines saved millions of lives. Without accelerated approval due to COVID-19, the technology likely would have languished for decades more.

Something similar happened with Alzheimer's research.

As recently as 2019, no scientist could advance in Alzheimer's research without accepting the dominant paradigm: that one specific protein, beta-amyloid, was the culprit. Research into whether viruses, immune responses, the gut, or other factors affect Alzheimer’s went unfunded. Researchers who questioned the “beta-amyloid protein theory” faced career-ending obstacles. Journals wouldn't publish papers with alternative ideas unless other journals had done so first. Funding required researchers to at least pretend their work supported the leading hypothesis. Conferences wouldn't give speaking slots to dissenting research. Even venture capital firms and pharmaceutical companies would back only amyloid approaches.

This tunnel vision appears to have set back Alzheimer's research by five to ten years.

Several Nobel Prize winners have faced similar obstacles. A 2011 Nobel laureate in physics said his work wouldn't get funded today; review committees consistently met it with skepticism. Nobel Prize-winning papers on how cells produce energy (the “Krebs cycle”) and on the Higgs boson were initially rejected because they didn't seem important.

An outsider to STEM, anthropologist Dr. Lisa Messeri, pointed out this tendency when I interviewed her for my Bulletin of the Atomic Scientists piece on what AI can and can't do to accelerate science. In short, funding and review committees are built to incrementally expand what we already know, not to fund the weird work that shifts paradigms and solves big problems. We don't fund or support high-risk, high-payoff research because it performs poorly in consensus-based committees.

Current AI systems can accelerate some processes in science, like reviewing papers and analyzing promising research threads. But the broader scientific system isn’t built to nurture paradigm shifts.

The current funding system appears to rest on the outdated idea that science is a series of “discoveries” that bring us steadily closer to the right answer. But the path isn’t linear. Dr. Messeri explains that "fields tend to progress through ruptures - almost like a train skipping off one track and on to another." As Thomas Kuhn showed in The Structure of Scientific Revolutions, paradigm shifts bring entirely new foundations that overturn how we see the world.

Einstein’s theory of relativity is a classic example: it showed that time and space could bend, when all the existing literature said the two were fixed.

Einstein published his heretical research anyway, because formal peer review wasn’t standard practice back then. The one time a journal put his work through peer review, it didn’t pass.¹

Einstein would have struggled a lot in today’s environment.

We've optimized science for consensus, but breakthroughs come from disagreement.

We've built a system that would have stopped Einstein. We shouldn’t be surprised that breakthrough science feels so rare.

More people are starting to notice this problem. Institute for Progress co-CEO Caleb Watney suggests giving reviewers “golden tickets” that let each reviewer champion one controversial project. If some experts love a proposal and others hate it, that disagreement might be a good sign. Another option is a portfolio approach: set aside 20% of research funding for work that splits expert opinion, or that looks promising to some reviewers but gets rejected because most think it won't work.

It's not a perfect fix, but it's a start.

What breakthrough idea do you think is getting suppressed right now? And if you're a scientist reading this - what's the contrarian research you wish you could pursue?